Understanding Big O notation

Data Structure | Sat Aug 17 2024 | 2 min read

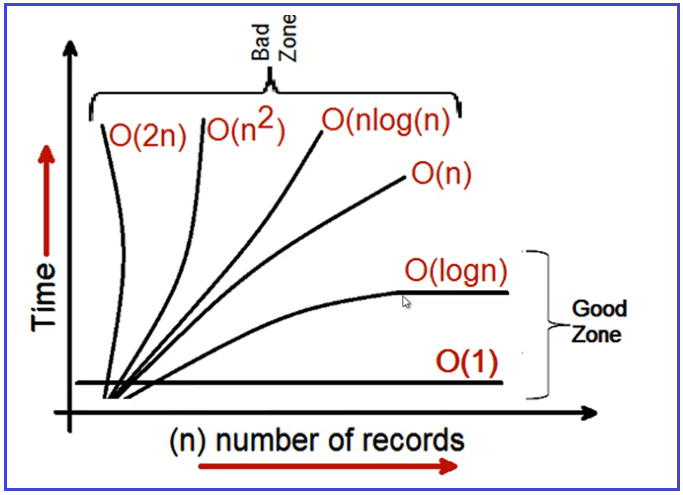

Big O notation is a mathematical notation that describes an upper bound on how an algorithm's runtime grows with the input size. It is most often used to describe the worst-case scenario.

In simple terms, Big O notation tells us "how code slows as data grows."

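- Describes how an algorithm's performance changes as the amount of data increases.

- Machine independent: it counts the number of steps, not the seconds taken on a particular machine.

- Ignores constants and lower-order terms, so O(n + 1) simplifies to O(n).

- Helps predict how an algorithm will behave on larger inputs.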
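To make the O(n + 1) -> O(n) point concrete, here is a minimal sketch. The helper `countOperations` is a hypothetical name introduced only for illustration: it performs one fixed setup step plus one step per element, so its total work is n + 1.

```javascript
// Hypothetical helper (not from the article): does one fixed setup step
// plus one step per element, so the total is exactly n + 1 operations.
const countOperations = (n) => {
  let operations = 1; // fixed setup step, independent of n
  for (let i = 0; i < n; i++) {
    operations += 1; // one step per element
  }
  return operations;
};

for (const n of [10, 1000, 100000]) {
  const ops = countOperations(n);
  // The ratio ops / n gets closer to 1 as n grows,
  // which is why the "+ 1" is dropped in Big O.
  console.log(n, ops, (ops / n).toFixed(5));
}
```

As n grows, the extra constant step becomes a vanishing fraction of the total work, so only the n term is kept.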

In Big O notation, the "O" refers to the order of growth of an algorithm's runtime. The notation is followed by a function that represents the upper bound of the algorithm's time complexity.

```javascript
// Approach 1: add the numbers one at a time in a loop
const addNumbersLoop = (n) => {
  let result = 0;
  for (let i = 0; i <= n; i++) {
    result += i;
  }
  return result;
};

// Approach 2: use the closed-form formula n * (n + 1) / 2
const addNumbersFormula = (n) => {
  return (n * (n + 1)) / 2;
};

console.log(addNumbersLoop(10000)); // 50005000
console.log(addNumbersFormula(10000)); // 50005000
```

In the above example, the execution time of Approach 1 increases as n increases, while the execution time of Approach 2 stays the same no matter how large n is. Approach 1 is therefore linear, so its Big O is O(n). Approach 2 is constant in terms of execution time, so its Big O is O(1).
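One way to see this difference without relying on a stopwatch is to count steps. The sketch below uses two hypothetical instrumented helpers, `sumWithLoop` and `sumWithFormula` (both names are assumptions made for this illustration), which return the sum together with the number of basic steps performed.

```javascript
// Hypothetical instrumented version of the loop approach:
// returns the sum and how many loop iterations it took.
const sumWithLoop = (n) => {
  let result = 0;
  let steps = 0;
  for (let i = 0; i <= n; i++) {
    result += i;
    steps += 1; // one step per loop iteration
  }
  return { result, steps };
};

// Hypothetical instrumented version of the formula approach:
// a single arithmetic expression, counted here as one step.
const sumWithFormula = (n) => {
  const result = (n * (n + 1)) / 2;
  return { result, steps: 1 };
};

for (const n of [10, 100, 1000]) {
  // The loop's step count grows with n; the formula's stays at 1.
  console.log(n, sumWithLoop(n).steps, sumWithFormula(n).steps);
}
```

Both helpers return the same sum, but only the loop version's step count depends on n.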

- O(1):
  - constant time
  - random access of an element in an array
  - inserting at the beginning of a linked list

- O(log n):
  - logarithmic time
  - binary search

- O(n):
  - linear time
  - looping through elements in an array
  - searching through a linked list

- O(n log n):
  - quasilinear time
  - quick sort (on average; its worst case is O(n^2))
  - merge sort
  - heap sort

- O(n^2):
  - quadratic time
  - insertion sort
  - selection sort
  - bubble sort

- O(n!):
  - factorial time
  - generating every permutation of n items
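To compare a few of these classes side by side, here is a small sketch that counts steps for a linear search, a binary search, and a nested loop over the same 1,024-element sorted array. The helper names (`linearSearchSteps`, `binarySearchSteps`, `pairSteps`) and the array size are assumptions chosen for illustration.

```javascript
// O(n): check each element in turn; returns the number of comparisons.
const linearSearchSteps = (sorted, target) => {
  let steps = 0;
  for (const value of sorted) {
    steps += 1;
    if (value === target) break;
  }
  return steps;
};

// O(log n): halve the search range on every iteration;
// returns the number of iterations needed.
const binarySearchSteps = (sorted, target) => {
  let low = 0;
  let high = sorted.length - 1;
  let steps = 0;
  while (low <= high) {
    steps += 1;
    const mid = Math.floor((low + high) / 2);
    if (sorted[mid] === target) break;
    if (sorted[mid] < target) low = mid + 1;
    else high = mid - 1;
  }
  return steps;
};

// O(n^2): visit every ordered pair of elements with a nested loop.
const pairSteps = (items) => {
  let steps = 0;
  for (let i = 0; i < items.length; i++) {
    for (let j = 0; j < items.length; j++) {
      steps += 1;
    }
  }
  return steps;
};

const data = Array.from({ length: 1024 }, (_, i) => i); // sorted: 0..1023
const target = 1023; // last element, the worst case for linear search

console.log("linear search steps:", linearSearchSteps(data, target));
console.log("binary search steps:", binarySearchSteps(data, target));
console.log("nested loop steps:", pairSteps(data));
```

For the same input, the linear search needs about n steps, the binary search about log2(n) steps, and the nested loop n * n steps, which is the gap the list above describes.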